Artificial Intelligence (AI) has seen both periods of rapid progress and frustrating setbacks. One of the biggest blows to AI research came in 1973 with the publication of the Lighthill Report, a highly critical assessment of AI’s progress.

Commissioned by the UK’s Science Research Council, the report questioned the feasibility of AI, arguing that it had failed to deliver practical results despite nearly two decades of research. As a result, AI funding in the UK was dramatically reduced, and cuts in other countries, notably the United States, followed in the same period. The downturn became known as the Second AI Winter.

This article explores the Lighthill Report, its criticisms, the resulting AI winter, and its long-term impact on artificial intelligence research.


What Was the Lighthill Report?

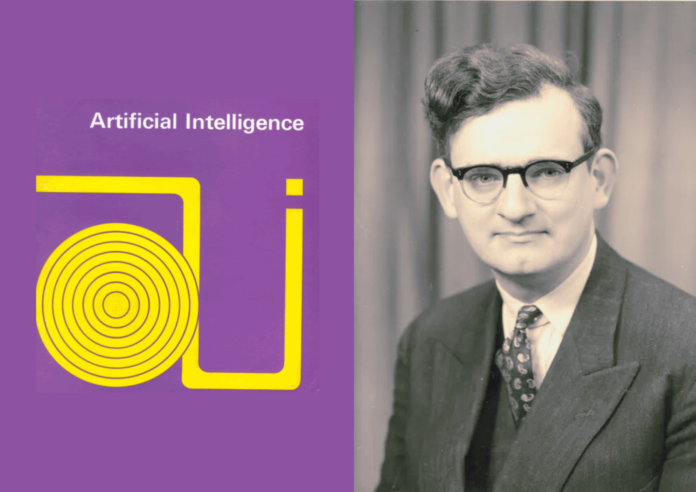

In the early 1970s, the Science Research Council (SRC), the UK government agency responsible for funding academic science, wanted to evaluate the progress of AI research to determine whether continued funding was justified. It assigned the task to Sir James Lighthill, Lucasian Professor of Mathematics at the University of Cambridge.

His report, "Artificial Intelligence: A General Survey," published in 1973, concluded that AI research had failed to achieve the breakthroughs it had promised, particularly in areas like robotics, natural language processing, and general intelligence.

Key Criticisms in the Lighthill Report

1. AI Had Limited Real-World Applications

- Lighthill argued that AI systems could not handle real-world complexity.

- Early AI programs worked only in controlled environments and failed when applied to real-world situations.

2. Machine Learning Was Too Slow

- AI programs lacked the computing power to learn efficiently.

- Neural network research had already lost momentum after Minsky and Papert’s 1969 book Perceptrons exposed the limitations of single-layer perceptrons.

3. AI Research Was Divided Into Isolated Subfields

- Lighthill divided AI research into three categories:

✅ A: Advanced Automation (replacing people with machines for specific purposes, such as industrial control or scientific data processing).

✅ B: Building Robots, the "bridge" activity meant to connect categories A and C.

✅ C: Computer-based studies of the Central Nervous System (computer models used in neurobiology and psychology).

- He judged that categories A and C had made genuine, if smaller than promised, progress. Category B, the bridge at the heart of AI’s ambitions, had been deeply disappointing.

4. AI Could Not Scale to Large Problems

- Lighthill’s central technical argument was the "combinatorial explosion": the search a general-purpose program must perform grows explosively as its problem domain grows. This issue remains a challenge in AI today.

- Programs that worked on small tasks failed when applied to complex real-world scenarios.

5. Government AI Funding Was a Waste of Resources

- The report’s pessimism, above all about robot building, was used to justify cutting government funding for AI research.

- The UK government largely agreed, leading to a major withdrawal of AI research grants.

The Immediate Consequences: AI Funding Collapses

The Lighthill Report had an immediate impact on AI research in the UK and beyond:

🚨 The British Government Defunded AI Research

- UK funding for AI and robotics programs was slashed, leading to the closure of several AI labs.

- The focus shifted towards traditional computing and rule-based systems.

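🚨 Large-Scale UK Funding Did Not Return Until the Alvey Programme

- The UK did not fund AI on a large scale again until the Alvey Programme, launched in 1983 largely in response to Japan’s Fifth Generation Computer Systems project. Alvey focused on "intelligent knowledge-based systems" such as expert systems rather than on general AI.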

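🚨 Funding Cuts Spread Beyond the UK

- In the U.S., DARPA also cut back its open-ended AI funding in the mid-1970s. This was partly under pressure from the 1969 Mansfield Amendment to support only mission-oriented research. Interest in neural networks, robotics, and machine learning declined more widely.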

This funding collapse led to the Second AI Winter, a period of stagnation and reduced research activity that lasted from the mid-1970s to the early 1980s.

The Second AI Winter: A Decade of Stagnation (1974–Early 1980s)

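The term “AI Winter” refers to periods when AI research funding dries up due to unmet expectations. This article counts the post-Lighthill downturn as the Second AI Winter, treating the collapse of machine-translation funding after the 1966 ALPAC report as the first. Many histories instead call 1974–1980 the first AI winter and reserve “second” for the collapse of the expert-systems market in the late 1980s. Either way, it was one of the worst setbacks in AI history.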

1. AI Labs Closed or Shifted Focus

- Many leading AI laboratories shut down or shifted focus to traditional computing and rule-based systems.

- The Edinburgh AI lab and other UK institutions suffered deep funding cuts.

2. Neural Networks Were Abandoned

- Minsky and Papert’s 1969 book Perceptrons had already exposed the limits of single-layer perceptrons, and after the Lighthill Report, funding for neural networks largely disappeared.

- It wasn’t until the 1980s and 1990s that neural networks were revived.

3. Robotics Research Slowed Down

- Governments lost interest in AI-powered robots, leading to a decline in robotics research.

- Instead, efforts focused on simple automation and industrial robots (which did not use AI).

4. Reduced Interest in Natural Language Processing (NLP)

- AI systems struggled with language translation and speech recognition, leading to loss of funding.

- Research in NLP slowed down until the late 1980s.

Although AI research did not stop completely, progress was much slower due to lack of funding and institutional support.

How AI Recovered From the Second AI Winter

Despite the setbacks caused by the Lighthill Report, AI research slowly made a comeback by the 1980s.

✅ The Rise of Expert Systems (1980s)

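- Companies started using expert systems, AI programs designed to mimic the decision-making of human experts in narrow domains.

- MYCIN was a 1970s Stanford research system for diagnosing bacterial infections and recommending antibiotics. XCON was used by Digital Equipment Corporation from 1980 to configure computer orders. Systems like these showed that AI could have practical applications, including in business.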

✅ Neural Networks Were Revived (1986)

- Backpropagation, a method for training multi-layer neural networks popularized in 1986 by David Rumelhart, Geoffrey Hinton, and Ronald Williams, reignited interest in machine learning.

- Hinton later helped lead the deep learning resurgence of the 2000s and 2010s.

✅ Computing Power Improved

- By the 1990s, computers were faster and more powerful, allowing AI to scale beyond the limitations noted in the Lighthill Report.

Although the Second AI Winter slowed progress, AI research ultimately recovered and evolved into the powerful field it is today.

Lessons from the Lighthill Report and AI Winter

The Lighthill Report taught AI researchers several valuable lessons:

1. Hype vs. Reality: AI Must Deliver Practical Results

- One of AI’s biggest problems in the 1960s was over-promising and under-delivering.

- The lesson is to demonstrate practical applications before making bold claims.

2. AI Needs Computational Power

- Early AI was limited by slow computers and small datasets.

- Modern AI thrives because of big data, cloud computing, and GPUs.

3. AI Research Requires Long-Term Commitment

- AI development takes decades, not just a few years.

- Governments and tech companies today invest billions in AI, understanding that progress takes time.

4. Neural Networks Were Not Dead—Just Waiting for a Revival

- Neural networks fell out of favor during this period, but deep learning became AI’s greatest breakthrough in the 21st century.

The Setback That Changed AI Research

The Lighthill Report (1973) and the Second AI Winter were major setbacks for artificial intelligence. They slowed down AI research for over a decade, causing funding cuts, lab closures, and a shift in focus away from general AI.

However, the lessons learned from this period helped shape AI’s comeback. By the late 1980s and 1990s, expert systems, machine learning, and neural networks revived the field, eventually leading to the modern AI revolution we see today.

While the Lighthill Report nearly halted AI progress, it also forced researchers to rethink their approach, leading to the more practical, data-driven AI advancements we rely on today.

ADDITIONAL RESOURCES: Transcript of the Lighthill Debate (Video):

The Full Transcript of the Debate

Host: Good evening and welcome to the Royal Institution. Tonight we’re going to enter a world where some of the oldest visions that have stirred man’s imagination blend into the latest achievements of his science. Tonight we’re going to enter the world of robots. Robots like Shakey, developed by the Stanford Research Institute. Shakey is controlled by a large computer. He’s directed through a radio antenna. Through a television camera he gets visual feedback from his environment. The box appears on the monitor screen. The computer analyses the traces which appear on the visual display until it can interpret them as an object it recognises. Shakey gets tactile feedback through his feelers. He’s able to move boxes with his push bar. He’s programmed to solve certain problems that can be contrived in his environment. To choose, say, an alternative route to a certain point when his way has been blocked. Shakey is unquestionably an ingenious product of computer science and engineering. But is he anything more? Is he the forerunner of startling developments which will endow machines with artificial intelligence and enable them to compete with and even outstrip the human brain? Robots like this one in Stanford University’s computer science department are able to perform certain tasks. But will robots ever be able to perform a wide variety of tasks? To learn from their experience? To use what they’ve learned to solve these new problems beyond those envisaged by their human programmers? Or will their so-called intelligence, their performance, remain forever at the level of a three-year-old child at its first games?

One man who’s pessimistic about the long-term prospects of artificial intelligence is our speaker tonight, Sir James Lighthill, one of Britain’s most distinguished scientists. He’s the Lucasian Professor of Applied Mathematics at Cambridge and has worked in many fields of applied mathematics. He’s a former director of the Royal Aircraft Establishment at Farnborough. Last year, he compiled a report for the Science Research Council which condemned work on general purpose robots. Not surprisingly, scientists who’ve been working on such robots have reacted strongly in defence of their field. Three of them are here tonight to challenge Sir James’ findings. After they’ve had their say, the discussion will be opened up to members of the audience here. Many of them are mathematicians and engineers, computer scientists and psychologists, and their contributions will be particularly welcome.

Before Sir James Lighthill makes his opening speech, I should like to introduce the men who will lead the debate against him. Donald Michie is Professor of Machine Intelligence in the University of Edinburgh. His laboratory is the only one in this country engaged on large-scale robot research. John McCarthy is Professor of Computer Science at Stanford University in the United States, another great centre of robot research. He’s the director of Stanford’s Artificial Intelligence Laboratory and has flown over especially for this programme. And Richard Gregory is a Professor in the Department of Anatomy at Bristol University. His concern with artificial intelligence arises out of his work on perception. Before going to Bristol, he was a founder member of the Edinburgh team and helped launch its robot project.

And now the moment has come to meet our Principal Speaker, Professor Sir James Lighthill.

Professor Sir James Lighthill: I’ll begin by making a few distinctions. The first is between automation and a general purpose robot. Automation is replacing human beings by machines for specific purposes. It has made great progress in the 20th century and, where the replacements have been put into effect humanely, has led to general benefit: improved productivity and higher standards of living. A general purpose robot is an idea that has often been described, involving an automatic device that could substitute for a human being over a wide range of human activities. That’s what I shall argue is a mirage. Automation is the province of the control engineer. He designs feedback control systems that act to reduce any deviation of some quantity from its desired value. For example, in this automatic aircraft landing, the throttles move so as to reduce changes in the speed of the aircraft, and other controls reduce deviations from the desired glide path.
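The control engineer’s idea, acting against any deviation from a desired value, can be made concrete with a minimal Python sketch of proportional feedback holding an aircraft’s speed. It assumes a toy model in which the throttle command changes speed directly. The gain, time step, target speed, and one-off gust are illustrative values, not taken from any real autoland system.

```python
def hold_speed(target, speed, gain=2.0, steps=60, dt=0.1, gust_step=20, gust=-5.0):
    """Proportional feedback: the throttle acts against any deviation from target.

    Toy model (an assumption): speed changes by dt * throttle each step, and a
    single gust knocks `gust` units off the speed at step `gust_step`.
    """
    history = []
    for k in range(steps):
        error = target - speed           # deviation from the desired value
        throttle = gain * error          # correction proportional to the deviation
        speed += dt * throttle           # toy plant: throttle changes speed directly
        if k == gust_step:
            speed += gust                # external disturbance the loop must reject
        history.append(speed)
    return history


history = hold_speed(target=150.0, speed=140.0)
print(f"before gust: {history[19]:.2f}, just after: {history[20]:.2f}, final: {history[-1]:.2f}")
```

In this model each step shrinks the error by a factor of (1 - gain * dt), which is 0.8 here, so the speed settles back to the target after the gust. If gain * dt exceeded 2, the loop would oscillate with growing amplitude and diverge.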

Increasingly, an important role in automation is played by computers. A computer is an extremely fast, reliable, and biddable device for manipulating numbers and similar symbols according to rules clearly prescribed in a program. Computers are tools of the greatest value in a very wide range of human activities, including all branches of scientific research, and we’ll see examples of that, and all branches of engineering. The control engineers have made excellent use of computers in automation, for example, in numerically controlled machine tools that will cut metal parts to geometrical shapes defined by equations in a program. One of the important new branches of science is computer science. Workers in computer science constantly improve our repertoire of things that can be done with computers, not just arithmetic and geometry, also algebra and calculus and logic.

Logic by computer means manipulating symbols, symbols representing different statements, in accordance with a program, to find out what can be deduced from what, and so on. Advanced automation can mean automation making use of the computer’s full logical potentialities developed by computer scientists. For example, in modern computer-aided design of an electrical printed circuit, the computer’s role in identifying all these current paths is still very specialized, replacing human beings for a very specific purpose, but most effective. Other automatic devices exploiting the logical capabilities of computers are used to organize scientific data through data banking and retrieval. An example is a data bank of the properties (boiling point, latent heat, etc.) of hundreds of thousands of chemical compounds.
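Lighthill’s "logic by computer", manipulating symbols by rule to find out what can be deduced from what, can be illustrated with forward chaining over simple if-then rules. This is a minimal Python sketch. The rule format and the circuit-flavoured facts are hypothetical examples, not drawn from any system mentioned in the debate.

```python
def forward_chain(facts, rules):
    """Return every fact deducible from `facts`.

    Each rule is (premises, conclusion): if all premises are known, the
    conclusion is added. Passes repeat until one adds nothing new.
    """
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if conclusion not in known and all(p in known for p in premises):
                known.add(conclusion)
                changed = True
    return known


# Hypothetical rules about a simple circuit.
rules = [
    (("switch_closed", "battery_charged"), "current_flows"),
    (("current_flows", "bulb_intact"), "lamp_lit"),
    (("fuse_blown",), "no_current"),
]
derived = forward_chain({"switch_closed", "battery_charged", "bulb_intact"}, rules)
print(sorted(derived))
```

The conclusion "lamp_lit" is reached in two steps, through "current_flows". The fuse rule never fires because its premise is not known.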

I’ve been talking about computers and their benefits to us in a lot of fields, but I must come to the other side of the case. Computers have been oversold, understandably enough, as they are very big business indeed. It’s common knowledge that some firms bought computers in the expectation of benefits which failed to materialize. My concern tonight, however, is with the overselling of the longer-term future of computers. The scientific community has a heavy responsibility to put forward its carefully considered view of the facts to avoid the public being seriously misled. The U.S. National Academy of Sciences did just that in 1966, when it reported that enormous sums of money had been spent on the aim of language translation by a computer with very little useful result, a conclusion not subsequently shaken.

Failures continually occurred also in computer recognition of human speech or handwritten letters and in automatic proving of theorems in higher mathematics. But our subject tonight is robots, and we must identify what they are. Several groups of able computer scientists have for many years been adopting a particular point of view regarding their work and given it a name, artificial intelligence or machine intelligence. The idea is to operate from a sort of bridge between studies of how brains of living creatures work and studies of how computer programs and automatic devices based on them work. This interesting point of view has been current for over 20 years. Please notice that this view does not just mean that people who study brains, psychologists and neurobiologists should use computers. Those, as I mentioned earlier, are used effectively in all branches of science now. Nor does it mean another obvious fact that in writing programs for computers we are influenced by introspectively considering how a human brain would carry out the logical processes required.

The idea of artificial intelligence means that besides doing these two things, we engage in a definite bridge activity between advanced automation and computer science on the one hand and studies of brains of central nervous systems using computers on the other. The bridge activity proposed is building robots. I use robot not to mean an automatic device aimed at replacing human beings for a specific purpose in an economical way. A robot, rather, is an automatic device designed to mimic a certain range of human functions without seeking in any useful sphere of human activities to replace human beings. This robot, which you’ve already seen from the Stanford Research Institute, is one of the most sophisticated in current operation. I’ll ask, why should robots be built and studied? There are at least two serious answers to this question.

First, that generalized information on automatic devices may result, which can be of use in a wide range of specialized automation problems. Second, that a device which mimics a human function, such as how we avoid an obstacle, may assist in making a scientific study of that function. I shall argue that these were eminently good and sufficient reasons for embarking on the work 20 or 10 years ago. In practice, however, the line of approach has led to somewhat disappointing results in these respects. We have acquired rather little generalized information applicable to a wide range of automation problems. Instead, we find that specialized problems are best treated by specialized methods, and I shall try to explain why that is. Similarly, the sciences of psychology and neurobiology have benefited not from robot work in general, but from those computer models that take into account really extensive bodies of experimental data on psychological behavior or on nerve cell networks in the brain. Before I expand on these reasons for a certain disenchantment with robot research, I shall predict that people nevertheless will go on building them. At all periods of history, the human imagination has been captivated by the idea that the mysterious arts, whether of the sorcerer’s cell in earlier times or the scientist’s laboratory today, might be used for a process of, as it were, artificially giving birth. Whether for this reason or not, a large section of the public finds the very idea of robots thrilling. It wants robots. It is prepared to pay for robots, if only as entertainment.

Money was being made at the end of the 18th century not only from mechanical dolls of great ingenuity, but also from exhibiting large, apparently automatic chess-playing robots. Their capabilities actually arose, like those of the Daleks today, from the presence of a man skillfully concealed inside. Science fiction in all the media has helped to intensify this old fascination with robots as artificial beings artificially given birth. Modern robots certainly seem to imitate children in some respects. They play games. They do puzzles. They build towers of bricks. They recognize pictures in drawing books. Scientists may well find building them attractive either because the very idea exercises its old fascination on them or because the public, as represented in funding bodies, still feels that fascination enough to be prepared to pay. On this, I’ll say no more. The last thing I want to do is to argue against the entertainment industry. What I have said, however, explains my description of the general purpose robot as a mirage, meaning an illusion of something that may be strongly desired. Now I must speak of the fundamental obstacles to developments on those lines.

Every existing robot operates in an extremely restricted world, a sort of playpen. That limited set of objects which are to be processed by the robot’s computer program is often referred to as the program’s limited universe of discourse. Such a limited universe of discourse may be a so-called tabletop world where block-stacking jobs and other eye-hand operations may be carried out, or it may be a drawing book for visual recognition jobs in two dimensions, or a board for chess or some other game or puzzle. Whether or not there are psychological motives for a choice of an extremely limited playpen universe within which the robot operates, there are certainly practical reasons. The whole of a very large computer is being used to organize the sequence of operation of one of these robots. If the universe of discourse within which it operates were made a lot bigger, the size of computer required would increase astronomically. This is often referred to as the combinatorial explosion. The combinatorial explosion means an explosive increase in the computer power required to deal with moderate increases in the so-called knowledge base which the computer has to keep organized. It’s not the movements of the robot that require these huge computer powers. It’s organizing the logical analysis needed to decide its sequence of operations.

A so-called self-organizing program is a program that can organize the sequence of robot operations without clues fed in from the fruits of human intelligence. Experience indicates that any self-organizing program must continually cause long searches to be made through the computer’s store of data regarding the universe of discourse. A typical search might be for that combination of items and their associated attributes which satisfies some relationship necessary in solving a problem of what to do next. The combinatorial explosion means that the length of search grows explosively with an increase in the universe of discourse, essentially because that length of search depends on the number of ways in which items in the store of data can be grouped according to particular rules and that number of ways becomes enormously large extremely fast. Doubling the universe of discourse may make the searches thousands of times longer. All this means that any big increase in computer power that will come in the future will allow these self-organizing programs to handle only a moderately increased size of universe of discourse.
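Lighthill’s claim that doubling the universe of discourse can make searches thousands of times longer can be checked with one concrete way of counting groupings. For illustration only, the sketch below assumes that the relevant count is the number of ways to partition n items into groups, known as the Bell number B(n). Real search spaces depend on the particular rules involved.

```python
def bell_numbers(n_max):
    """Return [B(0), ..., B(n_max)], where B(n) counts the ways to partition
    n distinct items into non-empty groups (computed with the Bell triangle)."""
    bells = [1]
    row = [1]
    for _ in range(n_max):
        new_row = [row[-1]]              # each row starts with the previous row's last entry
        for value in row:
            new_row.append(new_row[-1] + value)
        row = new_row
        bells.append(row[0])
    return bells


bells = bell_numbers(40)
for n in (5, 10, 20):
    print(f"{n:>2} items: {bells[n]:,} groupings; {2 * n} items: {bells[2 * n] / bells[n]:,.0f}x as many")
```

Under this count, going from 5 to 10 items raises the number of groupings from 52 to 115,975, about 2,230 times as many. That is the scale of growth Lighthill describes.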

All attempts at general problem-solving programs, whether concerned with theorem-proving or with the so-called common-sense problems that arise in most robot situations, have been and must continue to be severely limited by the combinatorial explosion in the size of problem which they can tackle. Repeated failure to get round these difficulties has forced programmers to adopt an expedient known as the heuristic. This is a method of constantly guiding the search by, as it were, telling the robot when it is warm, and when it’s getting warmer, and so on, a procedure that we all know shortens any search. The heuristic is a numerical measure of how warm the computer has got, that is, of how favorable the current configuration within the computer store is to the aims of the program. It is purely human intelligence and human experience that supplies this heuristic, this evaluation function.
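The "warmer, colder" idea shows up clearly when you count how much work a search does with and without a heuristic. This minimal Python sketch uses greedy best-first search on an open grid, a swapped-in illustrative technique. Manhattan distance to the goal stands in for a human-supplied evaluation function, and the grid size, start, and goal are assumptions for illustration.

```python
import heapq
from collections import deque


def neighbours(cell, size):
    """Yield the up/down/left/right neighbours of `cell` inside a size x size grid."""
    x, y = cell
    for dx, dy in ((1, 0), (-1, 0), (0, 1), (0, -1)):
        nx, ny = x + dx, y + dy
        if 0 <= nx < size and 0 <= ny < size:
            yield (nx, ny)


def blind_search(start, goal, size):
    """Breadth-first search with no sense of warm or cold; returns cells examined."""
    frontier = deque([start])
    seen = {start}
    examined = 0
    while frontier:
        cell = frontier.popleft()
        examined += 1
        if cell == goal:
            return examined
        for n in neighbours(cell, size):
            if n not in seen:
                seen.add(n)
                frontier.append(n)
    return None


def guided_search(start, goal, size):
    """Greedy best-first search: always examine the 'warmest' cell next."""
    def coldness(cell):
        # Hand-written heuristic (an assumption): Manhattan distance to the goal.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    frontier = [(coldness(start), start)]
    seen = {start}
    examined = 0
    while frontier:
        _, cell = heapq.heappop(frontier)
        examined += 1
        if cell == goal:
            return examined
        for n in neighbours(cell, size):
            if n not in seen:
                seen.add(n)
                heapq.heappush(frontier, (coldness(n), n))
    return None


blind = blind_search((0, 0), (19, 19), size=20)
guided = guided_search((0, 0), (19, 19), size=20)
print(f"cells examined without heuristic: {blind}, with heuristic: {guided}")
```

On this empty 20 x 20 grid, the blind search examines all 400 cells before reaching the far corner, while the guided search examines 39. The whole saving comes from the distance function the programmer wrote in. A heuristic like this is only as good as the knowledge built into it, and obstacles it knows nothing about can mislead it.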

For example, a specialized program for playing chess involves such a heuristic based entirely on human knowledge and experience of how to evaluate a chess position. This numerical evaluation includes basic elements like an estimate of the advantage of any difference in the white and black forces, with the usual weightings attached to the values of different pieces: a knight, for instance, has about three times the weight of a pawn. It also attaches suitable weights to elements of space control, such as the total number of squares attacked by one’s pieces, with extra weight for any center squares. It adds similar numerical estimates of the extent of development of one’s pieces, the degree of exposure of one’s king, and so on and so forth. Because of widespread interest in finding out how good a computer might be in a complicated game like chess, devoid of any chance element, a great deal of effort by chess grandmasters, including the former world champion Botvinnik, has been expended on getting these evaluation functions better and better. Then the computer conducts at each move a long search to find a sequence that will give it the best achievable position three or four moves ahead, assuming that its opponent makes its best replies, where best, of course, means only best from the point of view of the evaluation function.
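The chess program Lighthill describes has two parts: a hand-tuned evaluation function, and a search that assumes the opponent replies with the best move as judged by that same function (minimax). The Python sketch below is a toy. It scores positions from a few summary features rather than a real board. The piece values are the conventional ones; the positional weights and the tiny game tree are hypothetical.

```python
from dataclasses import dataclass, field

# Conventional material values in pawns (a knight is worth about three pawns).
PIECE_VALUES = {"P": 1.0, "N": 3.0, "B": 3.0, "R": 5.0, "Q": 9.0}
# Hypothetical positional weights; real programs tuned such numbers at length.
W_ATTACKED = 0.05        # per square attacked
W_CENTRE = 0.10          # extra weight per centre square attacked
W_KING_EXPOSURE = 0.50   # penalty per unit of king exposure


@dataclass
class Position:
    """Summary features of a position (a stand-in for a real board)."""
    white: dict = field(default_factory=dict)   # piece letter -> count
    black: dict = field(default_factory=dict)
    attacked: int = 0        # squares attacked: White minus Black
    centre: int = 0          # centre squares attacked: White minus Black
    king_exposure: int = 0   # White's king exposure minus Black's
    children: list = field(default_factory=list)  # positions after each move


def material(pieces):
    return sum(PIECE_VALUES[p] * n for p, n in pieces.items())


def evaluate(pos):
    """Weighted sum of features; positive scores favour White."""
    return (material(pos.white) - material(pos.black)
            + W_ATTACKED * pos.attacked
            + W_CENTRE * pos.centre
            - W_KING_EXPOSURE * pos.king_exposure)


def minimax(pos, depth, white_to_move):
    """Look `depth` half-moves ahead, assuming each side picks its best reply."""
    if depth == 0 or not pos.children:
        return evaluate(pos)
    scores = [minimax(c, depth - 1, not white_to_move) for c in pos.children]
    return max(scores) if white_to_move else min(scores)


# Hypothetical two-half-move tree: White chooses move A or B, then Black replies.
knights_up = Position(white={"N": 2}, black={"N": 1})  # Black blunders a knight
black_centre = Position(centre=-2)                     # Black grabs the centre
move_a = Position(children=[knights_up, black_centre])
move_b = Position(children=[Position(centre=5), Position(centre=4)])
root = Position(children=[move_a, move_b])

scores = [minimax(m, 1, white_to_move=False) for m in root.children]
print(scores)
```

Move A contains the most tempting line, winning a knight. Minimax nevertheless scores it at -0.2, because Black would simply answer with the centre grab. Move B’s worst case, 0.4, is better, so the search prefers B. As Lighthill stresses, "best" here only means best according to the evaluation function.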

This line of research was pursued actively for over 20 years, so the results give a good indication of what can be achieved with special purpose automation when a very large amount of human knowledge and experience about the problem domain or universe of discourse, still quite a modest one in size, has been incorporated into the program. The programs play quite good chess of experienced amateur standard, characteristic of county club players in England, although chess masters like our own David Levy beat them easily. This story is typical of the whole range of advanced automation in general, which has made reasonable progress when directed towards some specialized purpose concerning which a very large amount of human knowledge can be incorporated into the program. On the other hand, general purpose programs cannot be designed in this way, and in any large, variegated universe of discourse they fail by enormous margins, owing to the combinatorial explosion. The general purpose robot, then, is a mirage.

The science fiction writers, possibly others, will try to keep it shimmering or appearing to shimmer there on the horizon in front of us, and there’s something in most of our minds that wants to believe it’s there. So many people may feel disappointed to hear it’s not, although really they should feel encouraged by evidence for the uniqueness of man, the uniqueness of the human race and of human brains. The many unique features of human beings include emotional drives and remarkable gifts for relating effectively with other human beings, as well as powerful abilities for reasoning over an extraordinarily wide universe of discourse. There is no reason why any of these features should be realizable in a computer of relatively simple organization, driven by even a very complicated program that has been read into its store. No reason why such a combination can begin to approach what the vastly more intricate networks of nerve cells inside human skulls can do. Neurobiological research on the visual cortex has shown the extraordinary efficiency with which specialized networks of specialized neurons play their part in analyzing visual fields.

It’s probable that the extraordinary self-organizing capability of the cerebral cortex has resulted from the evolution of specialized neural networks of extreme complexity, which there is no question of imitating with a programmed robot. Research on many different aspects of brain structure and function will continue and will increasingly be helped by computer-based theories adapted to the actual neurobiological data and problems and to the results of experimental psychology. At the same time, advanced automation in various specialized problem domains will forge ahead. However, the gap between these two fields will remain too great for the attempts at building a bridge between them to be effective. Always there may be some people who try to make us think we can see that old general-purpose robot shimmering there on the horizon, but he’s a mirage.

Host: Thank you very much, Sir James. I suspect that a number of people will be rather sad to hear that robots are a mirage, and we have here at least three people who have good reason to believe they’re not. For instance, Professor Michie runs a laboratory in Edinburgh which is one of the world’s leading centers for robot research. Don Michie, would you like to start the discussion off?

Professor Don Michie: I’m certainly not going to take you up, Sir James, on the term mirage, and I think to do so might be presumptuous in this company. Professor Gregory is one of the world’s leading authorities in optical illusions of all kinds, and presumably that includes mirages. But I am going to take you up quite sharply on the term general purpose, because I have the feeling that this is very near the crux of the matter, and I have a suspicion that under your term, general purpose, it’s possible that there are two quite distinct and two quite important notions snuggling under the same blanket, one notion being the notion of an experimental device, a research prototype, which one might more properly call a research purpose device, and I would be happy to talk about research purpose robots, by which one means devices which have no other purpose but to be used by scientists to advance knowledge in a particular new domain to test feasibility and to investigate principles. It’s very close to the idea of the experimental prototype. I would say that the primitive flying machine of the Wright brothers would be a good example of such a device, certainly general purpose. And certainly the work done with computer-controlled robots in the various artificial intelligence research laboratories around the world in the last five or six years, I think could fairly be described in those terms, research purpose robots.

The other concept which I think comes under the same term of general purpose, and may be confused with it, is the notion of versatility, by which one means the ability to re-instruct, re-educate almost, a device rather quickly and rather easily and rather conveniently from the point of view of the human user. And this property of versatility is of extreme interest to workers in the field of artificial intelligence, and it’s not entirely without relevance in the future, perhaps in the industrial applications of robotics to assembly line operations and similar tasks. One of the problems in the industrial context is the problem of short runs, where a given product has its specifications changed every few weeks or every few months, requiring radical retooling and writing off of assembly equipment. And there’s a good deal of interest at present in industry in how to incorporate versatility in such devices. Research on versatility in programming systems of a complex kind, which have to deal with fragments of the real world, is one of the studies which may lead towards that end, quite apart from its own intrinsic interest. Now, in Edinburgh, we have made some identifiable steps in that direction.

The film that I’m going to show now is reasonably described, I think, as the Edinburgh research purpose, versatile assembly robot, locally described as Freddy. That is Pat Ambler on the left, Rod Burstall, who supervised this work in the last years, putting a heap of parts on the platform. This is the beginning of the execution phase of the program. The previous teaching phase, which occupied altogether two days, isn’t shown here. And the parts can be dumped at random and have been. And in fact, an extra part, which doesn’t belong to that assembly kit at all, but belongs to a ship assembly kit, this one has been thrown in for good measure. That is the oblique camera that is responsible for building up an internal model of the overview of the whole platform. When it comes to detailed examination, the overhead camera is used, as here.

In a moment, that outline will be replaced by an approximation in terms of line segments. And there are elaborate data structures in the machine memory, which is a large part of the research interest, which form compact and convenient descriptions of the messy images seen by the television camera. Having identified that as a car body, it’s being picked up and put in the assembly station in a stereotyped position, ready for the second phase of the program, which we’ll see later, and which has less of interest in it from an artificial intelligence point of view. But the points of interest in this first part of the program are to do with constructing internal descriptions of messy and complex external phenomena, including, for example, jumbled heaps of that sort, as the basis for identification where possible, and where not possible, appropriate action. In the case of a heap, there’s a whole repertoire of strategies available, of which the first will be to attempt to identify a protuberant part, and then an attempt will be made to pick it up.
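The step Michie narrates, replacing a traced outline with an approximation in line segments, can be illustrated with the Ramer–Douglas–Peucker algorithm, a standard polyline simplification method. This is a swapped-in technique chosen for illustration. The transcript does not say which method Freddy’s software used, and the outline points and tolerance below are made up.

```python
import math


def distance_to_chord(p, a, b):
    """Perpendicular distance from point p to the straight line through a and b."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    length = math.hypot(dx, dy)
    if length == 0:
        return math.hypot(px - ax, py - ay)
    return abs(dx * (py - ay) - dy * (px - ax)) / length


def simplify(points, tolerance):
    """Ramer-Douglas-Peucker: keep the endpoints, and split at the point farthest
    from the chord between them if it lies more than `tolerance` away."""
    if len(points) < 3:
        return list(points)
    a, b = points[0], points[-1]
    distances = [distance_to_chord(p, a, b) for p in points[1:-1]]
    far = max(range(len(distances)), key=distances.__getitem__) + 1
    if distances[far - 1] <= tolerance:
        return [a, b]                    # the whole run is close enough to one segment
    left = simplify(points[: far + 1], tolerance)
    right = simplify(points[far:], tolerance)
    return left[:-1] + right             # drop the duplicated split point


# A made-up, slightly jittery trace of an L-shaped edge.
outline = [(0, 0), (2, 0.1), (4, -0.1), (6, 0.05), (8, 0), (10, 0),
           (10, 2), (10.1, 4), (9.9, 6), (10, 8), (10, 10)]
print(simplify(outline, tolerance=0.5))
```

With a tolerance of 0.5, the eleven jittery points reduce to two segments meeting at the corner. A closed outline would first be split into two open chains.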

These are potential hand positions; there’s a little bit of internal planning going on to try and find positions for placing the two hands which will be suitable for grabbing that protuberant part without fouling any of the other objects. And now, having selected a pair of positions, we’re doing a pick-up. The system will now look around the platform; that little twitch was to shake off anything else which might have been picked up by mistake along with the part. Having found an empty place, it’s putting the part down. It will now be examined by the overhead camera, and a caricature, a simple description, will be made. This will be matched against what the computer memory holds in the way of descriptions and identified, hopefully, as a wheel. The wheel will then be picked up and put in a suitable place preparatory to the final assembly.

We’ve jumped a bit, and we’re now nearing the end of this part of the program. This is the last wheel to be identified, and now all the parts are laid out, and the second part is just about to begin. The second part perhaps looks a little smoother, but it’s very much less interesting: it’s done blind, with no use of vision at all and quite considerable use of touch. For this purpose, the system has a primitive workbench, and that’s a little vice; it’s about to close the jaws on the wheel in order to clamp it in a more or less standard position. Only two really high-level routines are available to the programmer as commands for the assembly part, and we’ll see them illustrated in a moment. These little feeling operations are simply updating its internal model to correct the dead reckoning.

In fitting the axle into the hub, one of the high-level routines was used: a spiral search, in which it goes round in a widening spiral, prodding, and when it meets no resistance, pushes it home. The next operation is to insert the axle into one of the holes through the car body, and this is spiral search again, which has been successful. As for the difference between the two parts of the program: the second part is fairly conventional programming, simply taking advantage, perhaps, of a reasonably varied and well-documented library of robot support software, but the difference between that and the earlier part, the vision and the layout, can be illustrated by the effects of interference. If things go wrong during the assembly phase, which I’m saying is by far the less intelligent of the two, insofar as that word should be introduced at all, for instance if at this stage you were to knock the partially completed assembly onto the floor, the program would never recover from it. But if you do things like that in the earlier stage, it has a sufficiently elaborate model of its world and a sufficiently broad repertoire of strategies, in general, to be able to recover and push the job through to completion. So in that sense, one derives a valuable degree of robustness from the employment of some of these techniques.
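The spiral search is described only as going round in a widening spiral, prodding, until no resistance is met. A sketch under stated assumptions: the probe offsets lie on an Archimedean spiral (the source does not specify the spiral’s shape), and a hypothetical `meets_resistance(offset)` callback stands in for the touch sensor. The parameter names and defaults are invented for illustration.

```python
import math


def spiral_points(step=0.5, turns=5, points_per_turn=16):
    """Yield (x, y) probe offsets on an Archimedean spiral around the nominal
    position; the radius grows by `step` on every full turn."""
    yield (0.0, 0.0)  # try the nominal position first
    for i in range(1, turns * points_per_turn + 1):
        theta = 2 * math.pi * i / points_per_turn
        r = step * i / points_per_turn  # equals step * theta / (2 * pi)
        yield (r * math.cos(theta), r * math.sin(theta))


def spiral_search(meets_resistance, **spiral_args):
    """Prod at successive spiral offsets and return the first one where the
    hypothetical touch-sensor callback reports no resistance, else None."""
    for offset in spiral_points(**spiral_args):
        if not meets_resistance(offset):
            return offset  # no resistance: the part can be pushed home here
    return None
```

With a simulated hole at (1.0, 0.0) that accepts the axle within 0.3 units, `spiral_search(lambda p: math.dist(p, (1.0, 0.0)) > 0.3)` returns an offset inside that tolerance; if the callback always reports resistance, the search gives up after the configured number of turns and returns `None`.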

Well, this is the final assembly test. Since then, a slightly more advanced tour de force has been attempted and, in fact, succeeded, which was to teach the system to approach a jumbled heap consisting both of car parts and ship parts, completely mixed up, and successfully disentangle them, identify them, and construct one finished car and one finished ship. There are, in that innocently, deceptively simple-looking program, a number of techniques which we regard as artificial intelligence techniques. We have a number of fairly concrete ideas about how some of the crudities should be improved, and more intelligent features and considerable shortening of the instruction time introduced. My main thesis is that work in this area is work in an area of science which has an existence in its own right, that artificial intelligence is indeed a subject with its own purposes, its own criteria, and its own professional standards, and it is not to be identified with specific application areas.

Host: Well, Sir James, what about the versatile research purpose robot? Is that a mirage?

Professor Sir James Lighthill: Well, I thought that, in many respects, Professor Michie’s film was a good illustration of the description that I’d given of the robot as a device designed to mimic a certain range of human functions without seeking, in any useful sphere of human activity, to replace human beings: operating in a playpen world with its toy car and its toy ship, and a small universe of discourse, and therefore able to solve the logical problems that were involved in organizing the program. I would certainly agree with Professor Michie’s implication that, in certain factory jobs, one can create an artificially small enough universe of discourse so that one can think in terms of carrying out this type of logical organization of the task. Of course, against that is the fact that those who are involved in industrial automation are already doing this by their own methods. It’s not the people who work in laboratories called AI laboratories who have a monopoly of thinking about how to organize tasks in factories, or how to carry out these operations in small universes of discourse.

Professor Don Michie: Just a brief interruption, Sir James. Industrial robots are becoming quite common in factories, but they do have one thing in common, and that is that up to the present date, no use of visual sensing has yet been achieved on a practicable scale, and very little tactile sensing. I thought for a moment you were implying that there was not likely to be a chain of beneficial influence between research studies of this kind and the factory robotics of a few years hence. We still have to incorporate some of these facilities in the industrial environment, and there are members here of industrial automation groups who can confirm it. But there are firms that are doing very good visual pattern recognition and analysis by what I would call relatively conventional data processing methods, firms like Image Analysing Computers Limited.

Host: Professor McCarthy, one of the things I’m finding difficult to understand is this distinction between advanced automation and what you call artificial intelligence. Can you define for us what this distinction is? What is artificial intelligence?

Professor John McCarthy: Okay. Artificial intelligence is a science, namely it’s the study of problem-solving and goal-achieving processes in complex situations. It’s a basic science, like mathematics or physics, and has problems distinct from applications and distinct from the study of how human and animal brains work. It requires experiment to carry it out. Now it involves a very large number of parts, of which I will mention precisely four. One of them is these processes of search, which are dealing with a combinatorial explosion. Now it seemed from what you said that you had just discovered that as a problem, but in fact the very first work in artificial intelligence, namely Turing’s, already treated the problem of combinatorial explosions, and a very large part of the work in artificial intelligence, especially game playing, has dealt with that. The next problem is the representation of information internally in the machine: information about the particular situations that the machine has to deal with, the representation of procedures, and the representation of general laws of motion, which determine the future as a function of the past. A third problem is advice-giving: how we are going to instruct the computer or communicate with it. At present, programming, that is, influencing a computer program, is as though we did education by brain surgery. This is inconvenient with children and is also inconvenient with computer programs. Progress is being made on this.

The fourth that I want to mention can be called compiling, or, as it’s now called, automatic programming, but in an extended sense beyond the way it’s normally used in the computer industry: going from information that determines how something should be done to a rapid machine procedure for efficiently carrying it out. This is one of the major topics. Now I should remark with regard to all of these topics that they can be treated independently of applications and independently of how the brain works, and I would be perfectly glad to treat any one of these that you choose. On general purpose robots, I’d like to remark that in the strong sense of a general purpose robot, one that would exhibit human-quality intelligence, if not, so to speak, quantity, and would be able to deal with a wide variety of situations, the situation is in even worse shape than you think: even the general formulation of what the world is like has not been accomplished, so that even if you are prepared to lead the machine by hand through the combinatorial explosion, that is, to tell it which things to do next, you still cannot, with the present formulations, make it decide how to solve a complex problem.

Now this, in fact, has turned out to be the difficulty, not the combinatorial explosion. The common sense programs, or at least many of them, have occupied relatively little computer time in the areas they were capable of handling, but simply have too limited a formulation. Now part of this is due to a defect in current systems of mathematical logic, where the systems are designed to be reasoned about rather than to be reasoned in. Now I want to ask you a question, or maybe it’s a rhetorical one: in the documents that you received before you wrote your report and in the comments that you received after you wrote it, almost everyone made the point that AI was a separate subject with goals of its own and not intended to be a bridge between the other things.

Professor Sir James Lighthill: I would like to answer that question; I think it’s a very important question. You see, in this country there are a large number of first-rate computing science laboratories which have preferred not to call themselves AI laboratories, but have concerned themselves with what Professor McCarthy calls the central area of his field, namely the study of problem-solving and goal-achieving programs. And these have been tackled in their own right as fundamental computer science. Many of the points that he’s mentioned come in: for example, search, in the whole field of information retrieval, and compiling, where our fine computer science laboratories have been much involved in producing advanced programming languages. I was grouping it with advanced automation for a very good reason, because extremely often one finds that the stimulus of a really important practical problem in automation is the thing that causes new solutions to these questions to be found. And these add to the repertoire of what computer scientists can do. Now, what are the arguments for not calling this computer science, as I did in my talk and in my report, but calling it artificial intelligence? It’s because one wants to make some sort of analogy. One wants to bring in what one can gain by a study of how the brains of living creatures operate. This is the only possible reason for calling it artificial intelligence instead.

Professor John McCarthy: Let’s see. Excuse me. I invented the term artificial intelligence. I invented it because we had to do something when we were trying to get money for a summer study in 1956, and I had a previous bad experience. The previous bad experience occurred in 1952, when Claude Shannon and I decided to collect a batch of studies, which we hoped would contribute to launching this field. And Shannon thought that artificial intelligence was too flashy a term and might attract unfavorable notice, and so we agreed to call it automata studies. I was terribly disappointed when the papers we received were about automata, and very few of them had anything to do with the goal that at least I was interested in. I decided not to fly any false flags anymore, but to say that this is a study aimed at the long-term goal of achieving human-level intelligence. Since that time, many people have quarreled with the term, but have ended up using it. Newell and Simon, the group at Carnegie Mellon University, tried to use complex information processing, which is certainly a very neutral term, but the trouble was that it didn’t identify their field, because everyone would say, well, my information is complex. I don’t see what’s special about you.

Professor Sir James Lighthill: Yes. Well, Newell and Simon, I think, are a good example of people who have moved a little bit towards the problem of trying to do psychology. They’ve actually been asking how human beings solve simple problems, and trying to do a theory of this, and this is obviously a very desirable thing to do also. But I’m trying to suggest that we would be much clearer in what we attempt if, when we are trying to do psychology and neurobiology, we think of ourselves as psychologists and neurobiologists, and work with all the other guys in the field. And when we’re trying to do advanced automation and computer science, we work with people like control engineers, who have developed an awful lot of experience of how to do advanced automation well, and also with the key problem of how to actually get it into practice, all that business of humanely introducing it, and so on, that I mentioned at the beginning of the talk.

Host: I think this is where we must ask Richard Gregory to come and help us, because his research is in perception. To what extent have the robot studies of Michie and McCarthy helped in this work, Richard?

Professor Richard Gregory: Well, I want to say that it’s the general concepts which are coming out of artificial intelligence which are having an impact in psychology, rather than the specific programs, for example on neural nets, which you mentioned. And I go a little further than that. I may be exaggerating a little, but let me put the point. I think since behaviorism started in about 1900, and then Skinner, and the stimulus-response paradigm in psychology, experimental psychologists on the whole were regarding human beings as examples of advanced automation, where you have a stimulus coming in, a response coming out, and a black box in the middle, and that’s it. Now what I think is becoming very apparent is that human beings are not at all like that: we have a vast amount of data stored inside us, and although the information available to us is extremely noisy and often not directly relevant, on the whole we make rather good decisions. We act extremely reliably with poor input. Now what’s happened, I think, with the robot studies, and I think Donald was getting at this, is that we were all shocked and amazed how difficult it was, because we were misled by the total inadequacy of psychological theory and the emphasis that was put on stimulus-response. Now what is turning out, I think, is that the stimulus is not directly controlling behavior; it’s rather calling up, generally speaking and in normal situations, an appropriate internal model, map, hypothesis of the external world, and it’s this that we act upon.

So it’s not stimulus-response, it’s rather a certain amount of rather grotty information, a hopefully adequate internal model, and then the response based on that, much as a hypothesis in science enables one to make a decision, but the hypothesis is the result of a great deal of past information and generalization, which has been logically organized, and then the decision is made far more on what is internally represented than on what is available at that time, either available to the eye or to the telescope or to the microscope or to the electrical instrument of an engineer. I think this is what’s happened. So the emphasis on internal data and how it’s organized logically is what’s happening from the robot research.

So I think to say that this is a business of models and neural nets is what we thought ten years ago, and it’s what we’ve very much moved away from. Now, to sort of finish this a little bit, the point about intelligence is this. It exists because we’re intelligent. We have 10^10 components in a box about the size of a football on top of our shoulders. Ten to the ten components is a lot, but an awful lot of that is used up in vegetative functions like my tongue having to waggle about and this kind of thing in order to communicate. The actual number of neurons responsible for intelligence may be very, very much smaller than that. I see no reason why we cannot, in fact, make brains, because they exist physically. When you say the brain is unique, you at one time said it’s unique because it’s big. You then made remarks that there are certain circuits in the brain which we can’t replicate. Now, I would like to have an argument to show why this pessimism is justified. It seems to me pure pessimism or metaphysics.

Professor John McCarthy: It’s worse. It contradicts a mathematical theorem. I didn’t really expect that I would ever…

(Audience laughter)

Host: Let Sir James answer.

Professor Sir James Lighthill: Well, Professor Gregory seemed to make two almost contradictory points. First, he said that neural nets don’t matter, and finally, he said that in the end, they’re the thing we ought to be researching on most. I think he’s been a bit unfair to experimental psychologists because I think they have been working on internal stores of information. They’ve been working on short-term memory and long-term memory and these things, and they’ve used computer models to find out the relation between these different things. But of course, I do agree with his statement that we have learned a lot from the research in artificial intelligence, essentially finding out how difficult it was. We were all shocked and amazed to find out how difficult it was, namely, to extract information from noisy pictures, from sense impressions of the real complex world, and the evidence that we in fact do it by comparing with some sort of internal model, and this is a key feature that has come out of the work. Of course, it’s arguing against the hopes for general-purpose robots because they would have to have such a very complicated internal model, such a large internal universe of discourse that they’d be working with in order to identify what they were seeing in the real world.

But now I’ll come to his last point, where he comes back to neural networks. I pointed out that current computer architecture is, by comparison with the cerebral cortex, a very simple architecture, with all the complication built into the program. I say that there’s no reason to suppose that that type of architecture plus program can begin to approach what the vastly more intricate networks of nerve cells inside human skulls can do. And of course, there’s a theorem that says it can. I don’t think there’s a theorem that says it can in a way that can be realized in an acceptable time, because of the difficulties of a combinatorial explosion. I mean, the theorems for which Professor McCarthy is justly famous, and those of Professor Robinson and others, have pointed out that problems can be solved by algorithms, but the algorithms all involve enormous lengths of time with any reasonably sized universe of discourse because of the combinatorial explosion.

Professor John McCarthy: No, no, you’re confused.

(Talking over each other)

Host: But could we have Richard Gregory first of all to see whether this point has been answered about whether it is possible to construct an artificial brain.

Professor John McCarthy: But there’s a mathematical question; we might succeed in answering it…

Professor Richard Gregory: Well, first of all, I’d like to know in what sense you felt what I was saying was self-contradictory. I’m not saying one shouldn’t study neural nets, but I think…

Professor Sir James Lighthill: I mean, you said there was nothing ten years ago, but the actual fact there’s been some quite good work on new ideas of how neural nets can achieve specific tasks in the last few years. I mean, it is an active field of research at the moment.

Professor Richard Gregory: Yes, I’d like to submit that perhaps the concept of constraints is important here: if you have a system which is following logical operations, it has to have physical constraints corresponding to the logical steps required to produce the solution, whereas network work such as Beurle’s was more on whether it’s going to, so to speak, catch fire, run away with itself, this kind of thing, very, very crude work. I mean, it was brilliant at that time, but now it looks terribly crude because it isn’t concerned with the state of a net for the specific problem. It seems to me now the emphasis is on the logic of the problem. The next question will be how the physiology carries it out, but we haven’t yet even begun to answer that question. Now, what the robot stuff is beginning to do is to show how it can be carried out with electronics, and this is a lead, I think, to how physiological research may go when the cognitive process has become respectable within physiology, which is only just happening. The respectability, I think, is coming with the robot research. It’s making the logical cognitive processes scientifically respectable. This is a very great thing it’s doing.

Professor Sir James Lighthill: For different functions, I think the answer is different. Where it comes to simpler parts of the brain, like the cerebellum, I think it is already beginning to be possible to identify the function of neural networks, but I did feel that the cerebral cortex is incomparably more difficult.

Professor Richard Gregory: That’s why we need the robot research, is my point.

Host: It’s extremely difficult. You are not saying that there is any fundamental reason why it’s impossible, it’s just extremely difficult.

Professor Sir James Lighthill: Well, I do feel that, I mean, my neurophysiologist friends tell me that contemplating the complexity of the extraordinary random appearance of the connection of all the nerve cells in the cerebral cortex makes them feel that it is quite hopeless to attempt an analysis.

Host: Yes, Professor McCarthy, you wanted to say something earlier.

Professor John McCarthy: Right, I would like to make it clear what the theorem is; it’s not due to me. There are several theorems, as a matter of fact. The first one is due to McCulloch and Pitts, in 1943: that a certain kind of neuron that they were fascinated with could do any logical calculation. Another theorem along the same line was in Minsky’s PhD thesis, in which he showed that any element that had essentially what amounted to negative resistance could do it. Now, the simulation theorem would say the following: the time required to simulate a device with 10 to the 10th components would be simply proportional to the number of components, provided you have a large enough memory, that is, a 10 to the 10th element memory, to do the table lookups in. Now, one certainly would not advocate having an intelligent machine that would do this by simulating the neural net in the brain. In the first place, you can’t find out what the neural net is, and in the second place, there are almost certainly better ways of doing it, since the neural net in the brain is quite inefficient. But nevertheless, there are some mathematical theorems that say that the time required would really be only linear in the number of components.
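McCarthy’s two claims, that McCulloch-Pitts style units can compute any logical function and that simulating a network costs time proportional to its size, can be sketched in a few lines. This uses a common modern simplification of the 1943 model (signed integer weights and a threshold rather than absolute inhibition) and replaces the table lookups McCarthy mentions with weighted sums; `mp_unit`, `step`, and the dict-based network format are hypothetical names for this example.

```python
def mp_unit(weights, threshold):
    """A McCulloch-Pitts style threshold unit: outputs 1 when the weighted sum
    of its binary inputs reaches the threshold, otherwise 0."""
    def fire(*inputs):
        return int(sum(w * x for w, x in zip(weights, inputs)) >= threshold)
    return fire


AND = mp_unit([1, 1], 2)
OR = mp_unit([1, 1], 1)
NOT = mp_unit([-1], 0)  # an inhibitory input silences the unit


def XOR(a, b):
    """Not computable by a single unit, but a small network of units does it."""
    return AND(OR(a, b), NOT(AND(a, b)))


def step(state, network):
    """One synchronous update of a whole network.

    `network` maps each unit name to (list of (source, weight), threshold).
    Each connection is read once, so the work per step is proportional to the
    number of connections: linear in network size when fan-in is bounded."""
    return {
        unit: int(sum(w * state[src] for src, w in inputs) >= threshold)
        for unit, (inputs, threshold) in network.items()
    }
```

Since AND, OR, and NOT can be composed into any Boolean function, the first claim follows in this simplified setting. The linear cost of `step` mirrors the shape of the simulation result, on the assumption that each unit has a bounded number of inputs.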

Professor Sir James Lighthill: But that is only if you can unravel this extraordinarily complex network and decide how it does it, and I feel that is not so. Minsky’s perceptrons and so on were obviously a very interesting investigation. It’s not certain that the neurons are all of this type. As I say, the ones in the visual…

Professor John McCarthy: Minsky’s against perceptrons.

Professor Sir James Lighthill: Yes, I know. He’s written a book recently which sums up and says that they haven’t got us anywhere. Well, that’s unfortunate. And on the other hand, the detailed work on the visual cortex has shown that various specialized hardware, as the computer architect would call it, is involved in discriminating edges, vertical edges, distance, and so on.

Host: Donald Michie?

Professor Don Michie: I wonder if I could follow the combinatorial explosion just a little, and first of all ask whether the problem of programming a computer to play chess is a reasonable instance of the kind of difficulty that you have in mind. I think, in fact, you quoted it as an instance. There are about 20 alternative moves, 20 legal moves, at each stage, so that you can work out, and my colleague I. J. Good has worked out, that the total number of possible chess games is of the order of 10 to the 120, which is more than the number of elementary particles in the observable universe. So if you want combinatorial explosions, that’s quite a nice big one. There are two lines of approach that artificial intelligence people attempt to use to damp and combat the combinatorial explosion.
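A back-of-the-envelope check of the scale involved, assuming a uniform branching factor of 20 at every half-move (real chess positions vary, and the 10^120 figure is the quoted estimate, not something derived here): how deep must a 20-way tree go before its leaf count reaches 10^120?

```python
import math

BRANCHING = 20        # Michie's figure: about 20 legal moves per position
GAMES_EXPONENT = 120  # the quoted estimate: about 10**120 possible games


def positions_at_depth(depth, branching=BRANCHING):
    """Number of leaves in a uniform game tree searched `depth` plies deep."""
    return branching ** depth


# Smallest depth d with branching**d >= 10**GAMES_EXPONENT, via logarithms:
# d >= GAMES_EXPONENT / log10(BRANCHING).
plies_needed = math.ceil(GAMES_EXPONENT / math.log10(BRANCHING))
```

The answer is 93 half-moves, about 46 or 47 moves by each side. Even a four-ply look-ahead already has `positions_at_depth(4)`, that is 160,000, leaves.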

Roughly speaking, they can be grouped into syntactic methods of cutting down this wild branching ratio on the one hand, and on the other hand, in the long run much more significant and more promising, ways of building semantic information into the program in order to cut whole branches off the search tree. Most of the progress that has been made with computer chess so far, in fact almost all of it, would come under that first and rather primitive category, and in spite of that, I think you said yourself, the present level of computer chess is perhaps a middling club player; you said an experienced amateur. First of all, have you read the article in the June issue of the Scientific American, where the first program with semantics built in is described, and looked at the quality of the game cited there? Secondly, you mentioned David Levy and you mentioned Botvinnik, the former world champion. Do you know about David Levy’s bet, and do you know about Botvinnik’s comment on it?
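Alpha-beta pruning is one well-known way of cutting branches from a game tree without any chess knowledge at all; treating it as an example of what Michie calls syntactic methods is an assumption, not his statement. The sketch builds a hypothetical random game tree (`make_tree`, with invented depth, branching, and seed) and counts the nodes each search visits. Both searches return the same value at the root, but alpha-beta skips lines that the opponent would never allow.

```python
import random


def make_tree(depth, branching, rng):
    """Build a uniform game tree as nested lists with random leaf scores."""
    if depth == 0:
        return rng.randint(-100, 100)
    return [make_tree(depth - 1, branching, rng) for _ in range(branching)]


def minimax(node, maximizing, counter):
    """Plain minimax: visits every node. counter[0] tallies visits."""
    counter[0] += 1
    if isinstance(node, int):
        return node
    values = [minimax(child, not maximizing, counter) for child in node]
    return max(values) if maximizing else min(values)


def alphabeta(node, maximizing, counter, alpha=float("-inf"), beta=float("inf")):
    """Minimax with alpha-beta cutoffs: same root value, fewer nodes."""
    counter[0] += 1
    if isinstance(node, int):
        return node
    if maximizing:
        value = float("-inf")
        for child in node:
            value = max(value, alphabeta(child, False, counter, alpha, beta))
            alpha = max(alpha, value)
            if alpha >= beta:
                break  # the minimizing side will never allow this line
        return value
    value = float("inf")
    for child in node:
        value = min(value, alphabeta(child, True, counter, alpha, beta))
        beta = min(beta, value)
        if alpha >= beta:
            break  # the maximizing side already has something better
    return value


tree = make_tree(depth=6, branching=5, rng=random.Random(1973))
full, pruned = [0], [0]
best_full = minimax(tree, True, full)
best_pruned = alphabeta(tree, True, pruned)
print(best_full == best_pruned, full[0], pruned[0])
```

The exact node counts depend on the random scores and on move order; alpha-beta saves most when the best moves happen to be searched first.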

Professor Sir James Lighthill: Yes, I do, and of course the interesting thing is that this…

Host: Well, could one of you tell us…

(Audience laughter)

Professor Don Michie: Well, David Levy is an international master, and in 1968 he wagered a thousand pounds against a consortium that no computer program would beat him across the board in a 10-game match before 1979. It had to be done by the end of 1978. I can reveal the identity of the consortium. It consists in fact of Seymour Papert, John McCarthy, and myself. Now, it seems to me that if your pessimism is as deep-rooted as you wish us to believe, and the combinatorial explosion as powerful as you’ve stated, you should be ready to double that stake.

(Audience laughter and applause)

Professor Sir James Lighthill: I picked on chess because it’s an area where one can put in a maximum amount of human knowledge and experience of something that human beings have been very active in for centuries, and one can feed in through the heuristic as much as possible. The heuristic has been the main method of reducing the impact of the combinatorial explosion, and even in spite of this, one is able to reach, in a fairly modest universe of discourse, the chess board with its 64 squares, one is able to reach the kind of levels of play that we’ve described. The program that you saw, in fact, involved not a complete search of every possibility in the tree. There was a rejection of possibilities at an early stage if the position started deteriorating fast, and so a certain selection from the more promising lines is made in this program, and the programs have been constructed with a very great deal of ingenuity. It’s been one of the classic problems in computer science, something that everyone would like to solve. Computer firms have tried because they would like to see the success of computer against a master or grand master in this field, but nevertheless, this has not been achieved, and David Levy still doesn’t seem to think it will be achieved, and I agree with him.

Professor Don Michie: I think that I would like, if I might be allowed to utter a small warning here, dredged up from the remote past nearly a hundred years ago. It is, in fact, a very short excerpt that I want to read from a report. It was a report submitted on November the 15th, 1876, to the president of the United States Telegraph Company, and it goes as follows. Mr. G. G. Hubbard’s fanciful predictions, while they sound very rosy, are based upon wild-eyed imagination and a lack of understanding of the technical facts of the situation, and a posture of ignoring the obvious technical limitations of his device, which is hardly more than a toy, a laboratory curiosity. Mr. A. G. Bell, the inventor, is a teacher of the hard of hearing, and this telephone may be of some value for his work, but it has too many shortcomings to be seriously considered as a means of communication.

(Audience laughter and applause)

Professor Sir James Lighthill: If I had given my talk ten years ago, it would have been a very reasonable response to respond by quoting what was said about Bell’s inventions within a year or two after they’d started to be investigated. But the situation is different when one’s past the quarter century of a field like artificial intelligence. Then one comes into a period where some of the fundamental difficulties have begun to emerge. I have made it clear that I support a great deal of the work that is done by people calling themselves the artificial intelligentsia.

(Audience laughter)

Professor Sir James Lighthill: But I have also argued that enough of the fundamental objections and difficulties have now emerged so that one can feel dubious to a very high degree about predictions of a general purpose robot.

Host: Well, perhaps this is a good time to leave the artificial intelligentsia and get to the members of our audience, and ask them whether they’d like to make any points to Sir James or to the other principal speakers. There’s a question here.

Dr. Larkin: My name is Dr. Larkin. I work at the Department of Computer Science at the University of Warwick. I make robots. In particular, I make a robot called Arthur, who is mobile and blind, unlike Professor Michie’s, which is a hand-eye robot, although I must admit that Professor Michie moves the world rather than moving his robot in the world. Now, I call what I do with this robot psychomechanics. I prefer it. It’s one word rather than two of artificial intelligence, and probably describes more accurately what I’m trying to do. But this is a subject, as far as I’m concerned, which is firmly embedded in computer science. I’m in the computer science department, and the work I’m doing involves the kind of advanced programming that we’ve heard about, but the work I’m doing also affects our concepts of what a computer is, or rather, what a psychomechanism is, because a computer is not the beast for doing the job we’re talking about.

A computer is designed to do sums, and we have to look into the design of the machine we’re using to do the actual thinking part of the task, and see whether we can’t redesign that as well. And this is the area I’m, at present, very much concerned with. Now, when we get into the area of psychomechanisms, and that’s a psychomechanism. It’s fairly small, but if I use that as a computer, which I could do, it’s more powerful than the first computer I worked on, a DEUCE computer, and that used to fill a room. Now, psychomechanisms have some form of intelligence. I don’t think there’s any dispute about that, but there’s no spectrum of intelligence for robots the way there is a spectrum of intelligence for animals. For instance, my robot, Arthur, could be described as a literate dog. Now, just how general purpose is a literate dog?

Professor Sir James Lighthill: Well, I think if you really investigate the full range of psychological functions and capabilities of a dog, you’ll find it’s well ahead of all these robots.

Roger Needham (Cambridge University): I think one root of disagreement between Professor McCarthy and Professor Lighthill is that the one believes, and the other doesn’t, that there is a subject, the study of problem-solving and goal-seeking, which is quite independent of any particular sort of problem or any particular sort of goal. Now, since this is an issue on which I myself am in some intellectual doubt, it seems to me that it would help resolve it if we could have a list of the achievements in problem-solving and goal-seeking without any reference at all to what kind of problem or goal it is.

Professor John McCarthy: One achievement has been what I call the separation of heuristics from epistemology as a subject. Namely, the search processes are the domain of heuristics, and the epistemology is the formalism that you use to represent information and describe the world. Another achievement is Winograd's demonstration that carrying semantic information along as you go is the key thing, even in parsing a sentence. As far as I'm concerned, this work basically refutes the Chomsky school of grammar, because what is being carried along and used is not merely general semantic information about the meanings of words, but information about the particular situation in which the sentence is uttered.

Roger Needham (Cambridge University): I don't think it's necessary to go through the whole list. Of course, I don't deny the accuracy of what's been said, including things like the involvement of semantics. I'm very glad that people who wish to make robots do things have learnt about that, because it's been known for a very long time. All I would like to point out is that these are somewhat in the area of anecdotes, the swallows that might begin to make the summer, rather than anything beginning to look like the coherent structure of a scientific discipline, which I gather Professor McCarthy claimed it all was.

Professor John McCarthy: Okay, as to its coherence, well, it's a bit weak now for a number of reasons. One of them is what one might call the look-ma-no-hands school of programming, which says that you take something that no one has ever done before, you write a program to do it, you call your friends, you write a paper, and they admire the fact that it did it, with no effort to connect it to any coherent theory. The other thing which inhibits theory in artificial intelligence is that a theory can be checked immediately by whether it really does produce the behavior it is supposed to. So the apparent existence of theories in fields like psychology is very often a mirage… (Audience Laughter) …and I would say that the theoretical situation in AI is very tough. Now, I've been in this field, I don't know, maybe 20 years, and I'm not discouraged yet. I don't identify the rise of the science, and its reaching its peaks, with my own career. I imagine that the science will continue to grow even after I am no longer actively contributing to it.

Christopher Strachey (Oxford University): Thank you, Professor McCarthy. I've also been in the game about 20 years, and nor am I discouraged. But I do not choose to work in the field of artificial intelligence, because I think it is too difficult. I would like to comment on Professor Michie's anecdotal method of supporting work in a very difficult field by quoting a totally irrelevant, incorrect prophecy about Bell's telephone a hundred years ago. A more recent incorrect prophecy was made about the ability of machines to translate human languages, and that turned out exactly the opposite way. The general opinion was that it would be possible to have machine translation of human languages done efficiently and economically. It turned out, in fact, that it was rather cheaper to use a human being than any translation mechanism. I think it's a mistake to underestimate the intellectual difficulties of these fields. I'm a bit surprised, by the way, at how the people who work on artificial intelligence come along and say, oh, well, we started off like this, and after quite a short time, we were horrified to find it was all rather difficult.

Professor John McCarthy: And Samuel’s program for the same game?

Christopher Strachey (Oxford University): Samuel’s program for the same game is an example of advanced automation.

(Audience laughter)

Host: Let’s just let Professor Strachey finish.

Christopher Strachey (Oxford University): I think Samuel's program, and I think you would agree, is an example of advanced automation, in which he has built the properties of the game into the program.

Host: Right, Donald Michie.

Professor Don Michie: I was just going to say that I think Professor Strachey is a little too modest, in that his own work on checkers in the 1950s was, in fact, the launching pad from which Samuel subsequently developed his checkers learning program. Furthermore, Samuel's program has played a worthy and useful role, and many people have learned many things from it. If I may just conclude, one of the most valuable roles it has played, in the general rather than the technical area, is discrediting the cruder versions of the doctrine that you only get out what you put in, because eventually Samuel's program learned to be a better checkers player than Samuel himself.

Christopher Strachey (Oxford University): Well, if I may come back on that: not a better player than the people whose games he fed into it. Now, I object very strongly to the miscellaneous and irresponsible use of words like learning, which have no very clear meaning. They are emotive terms. I do not believe that Samuel's checkers player is, in any genuine sense, a learning program. It's an optimizing program, and I do not call optimizing programs learning programs. This is not the place to go into technical details, but I find there's a very great tendency among people working in the artificial intelligence field to spoil their case by using normal human terms, anthropomorphic terms, about very, very simplified objects, things like advice takers. The advice taker, the chess advice taker, is simply a programming system. It's a more specialized advice taker than my ordinary programming-language compiler and loader. That will take advice, but it isn't an advice taker; it's a way of instructing the computer to do something. I think to use the word "advice taker" when you mean a program is misleading.

Richard Parkins: Oh, yes. I’m Richard Parkins, and I’m a computer scientist. And it seems to me that Professor Lighthill and the artificial intelligentsia are arguing not about things, but about the names of things. Because whenever the artificial intelligentsia have produced what they consider to be a good example of artificial intelligence, Professor Lighthill has turned around and said, yes, that’s a marvelous piece of work, but it’s some other field.

Host: Do you want to answer that at this point?

Professor Sir James Lighthill: Well, it’s certainly true that I believe in having the minimum amount of philosophical mystification in talking about science. I agree with Professor Strachey that when we’re talking about programs, we should call them programs, and when we’re talking about brains, we should call them brains.

Host: You’ve been silent for some time, Richard Gregory. Do you want to say something?

Professor Richard Gregory: I'm a bit worried, so to speak, about the philosophical position here. When you say you can recognize that a problem is beyond science, I think you're saying it's beyond any future science. This is, I think, a bold claim. Take alchemy, for example, the transmutation into gold. I'm sure I can check on my facts here. I understand it was accepted as possible right through the Middle Ages, then it was damned by science, and then it was done with atomic power. I think I'm correct.

Host: There are many examples all through science. The need for a vital force in organic chemistry, for example, is one of the best. And almost inevitably, these restrictions have been shown to be wrong. But I think…

Professor Sir James Lighthill: There are limits to how far forward in the centuries we can even contribute.

Host: Sir James, you did eventually say that it was not impossible, but that it was highly improbable. This was the point, wasn't it?

Professor Sir James Lighthill: I think that in practical terms it's a mirage. If it's something we think we can see on the horizon, in the sense that it may be announced before we are on our deathbeds, or that our children will see it, then I disagree with such a view.

Host: Well, we have half a dozen people wanting to talk, but I'm afraid time's running out, and I must wind up the discussion, stimulating as it's been. I'd like to thank all those who've taken part, and especially our principal speakers, Professor Michie, Professor McCarthy, and Professor Gregory, for coming along tonight. Thank you.