Artificial intelligence and deep learning have revolutionized the modern world, powering everything from facial recognition and language translation to self-driving cars and medical diagnostics. At the heart of these advances is the artificial neural network (ANN)—a technology inspired by the human brain. But where did this idea originate?

The foundation for modern neural networks was laid in 1943 by two pioneering researchers, Warren McCulloch and Walter Pitts. Their groundbreaking paper, A Logical Calculus of the Ideas Immanent in Nervous Activity, introduced the first mathematical model of a neural network, showing how networks of idealized neurons could carry out logical operations much like the switching elements of an electrical circuit. This concept paved the way for machine learning, artificial intelligence, and deep learning as we know them today.

This article explores the history, theory, and profound impact of McCulloch & Pitts’ neural network model on modern AI.

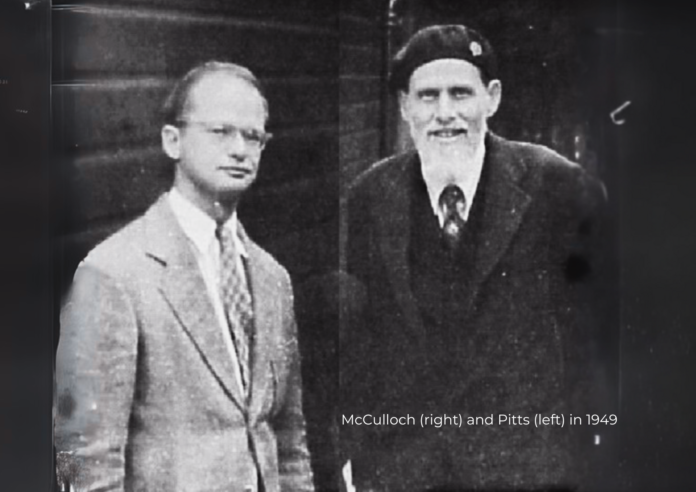

Who Were McCulloch and Pitts?

Warren McCulloch (1898–1969)

A neurophysiologist and psychiatrist, McCulloch was deeply interested in how the brain processed information. He wanted to understand how neurons functioned as a network and whether thought itself could be reduced to logical operations.

Walter Pitts (1923–1969)

A self-taught mathematical genius, Pitts was fascinated by logic, computation, and brain activity. He collaborated with McCulloch to apply Boolean algebra to neural networks, bringing mathematical rigor to the study of brain function.

Together, McCulloch and Pitts asked a bold question: Could human thought be represented by an electrical circuit? Their answer changed the course of computing history.

The McCulloch-Pitts Neural Model: A Logical Brain

In 1943, McCulloch and Pitts published a seminal paper proposing the first artificial neuron model, a simplified abstraction of how biological neurons process information. Their model introduced key concepts still used in AI today.

1. How Their Model Worked

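- They designed artificial neurons as binary units that were either “on” (1) or “off” (0), mimicking the all-or-nothing way biological neurons fire signals.

- Each neuron received inputs from multiple other neurons. In the original 1943 formulation these inputs were either excitatory or inhibitory rather than carrying adjustable weights; the weighted sum familiar from modern networks is a later generalization.

- If the total excitatory input reached a threshold and no inhibitory input was active, the neuron would activate (fire). Otherwise, it would stay inactive.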

This structure became the foundation of perceptrons and later deep learning neural networks.
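
The firing rule above can be sketched as a small function. This is a minimal illustration, not code from the 1943 paper: the name `threshold_unit`, the integer weights, and the "reaches or exceeds" (`>=`) convention are assumptions made here. A weight of 1 stands for a plain excitatory input.

```python
from typing import Sequence


def threshold_unit(inputs: Sequence[int], weights: Sequence[int], threshold: int) -> int:
    """Return 1 if the weighted sum of binary inputs reaches the threshold, else 0."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    if any(x not in (0, 1) for x in inputs):
        raise ValueError("inputs must be binary (0 or 1)")
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0


# Three equal-strength inputs with threshold 2: fires when at least two are on.
print(threshold_unit([1, 1, 0], [1, 1, 1], threshold=2))  # 1
print(threshold_unit([1, 0, 0], [1, 1, 1], threshold=2))  # 0
```

The unit has no memory and no learning step; its behavior is fixed entirely by the chosen weights and threshold.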

2. Boolean Logic and Computation

McCulloch and Pitts realized that their neuron model could perform Boolean logic operations (AND, OR, NOT). This meant that neurons could be used for computing—a revolutionary idea at the time.

For example:

- AND function: A neuron would only activate if both inputs were active (1,1).

- OR function: A neuron would activate if at least one input was active (1,0; 0,1; or 1,1).

- NOT function: A neuron could be designed to invert an input signal.

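Their work showed that networks of such neurons, given suitably chosen connections and thresholds, could compute logical functions. The model itself included no learning procedure (training came with later work), but it laid the groundwork for modern machine learning models.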
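
The three gates listed above can be sketched as threshold units with hand-picked settings. This is an illustrative sketch under assumptions: the helper `fires`, the gate function names, and the use of a negative weight to stand in for an inhibitory input are choices made here, not notation from the original paper.

```python
from itertools import product


def fires(inputs, weights, threshold):
    """Binary threshold unit: 1 if the weighted input sum reaches the threshold."""
    return 1 if sum(x * w for x, w in zip(inputs, weights)) >= threshold else 0


def and_gate(a, b):
    # Both inputs must be on to reach a threshold of 2.
    return fires([a, b], [1, 1], threshold=2)


def or_gate(a, b):
    # A single active input is enough to reach a threshold of 1.
    return fires([a, b], [1, 1], threshold=1)


def not_gate(a):
    # The negative weight plays the role of inhibition: with threshold 0,
    # the unit fires unless its input is active.
    return fires([a], [-1], threshold=0)


for a, b in product([0, 1], repeat=2):
    print(a, b, "AND:", and_gate(a, b), "OR:", or_gate(a, b))
for a in (0, 1):
    print(a, "NOT:", not_gate(a))
```

The weights and thresholds here are picked by hand for each gate; nothing in this sketch is learned from data.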

Why Was This a Breakthrough?

Before 1943, scientists had only a limited understanding of how neurons worked together to produce thoughts and actions. McCulloch and Pitts bridged neuroscience and computing, arguing that networks of simple on/off neurons could, in principle, carry out logical computation.

Their model was decades ahead of its time and became the inspiration for machine learning, AI, and modern neural networks.

How Their Work Influenced AI and Computing

1. The Foundation of Artificial Neural Networks (ANNs)

McCulloch and Pitts’ model directly inspired Frank Rosenblatt’s Perceptron (1958), one of the first learning algorithms for neural networks, which added adjustable weights that could be learned from examples. This laid the groundwork for the deep learning used today.

2. The Birth of Cognitive Science and AI

Their ideas influenced early computing and artificial intelligence researchers, including John von Neumann, who cited the paper in his 1945 draft report on the EDVAC, and Marvin Minsky. AI became a serious field of study in the 1950s and 1960s, partly thanks to their work.

3. The Neural Network Revolution in the 21st Century

After long periods of reduced interest, neural networks made a massive comeback in the late 2000s and 2010s. Today, descendants of McCulloch and Pitts’ ideas power:

- Deep learning models (GPT-4, DALL·E, etc.)

- Speech recognition (Siri, Google Assistant, Alexa)

- Computer vision (facial recognition, self-driving cars)

- AI-powered medical diagnostics

Without McCulloch and Pitts, AI as we know it would not exist.

Limitations and Challenges of Their Model

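Despite its brilliance, their model had several limitations. Most notably, it had no learning mechanism: connections and thresholds had to be fixed by hand, and the output of each neuron was strictly binary.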

Nonetheless, their work paved the way for future innovations in neural network research.

The True Pioneers of AI

Warren McCulloch and Walter Pitts’ 1943 neural network model was one of the most important breakthroughs in artificial intelligence history. By showing that networks of idealized neurons could implement Boolean logic, they created a blueprint for machine learning, deep learning, and AI-driven automation.

Today, as neural networks power intelligent machines, self-learning AI, and deep learning models, we are witnessing a realization of McCulloch and Pitts’ vision: machines that process information and make decisions in ways inspired by the human brain.